By Bob Reselman, Software Developer and Technology Journalist

The value proposition for migrating to the cloud is compelling. You don’t need to staff a department full of systems engineers and admins to provision and maintain a company’s infrastructure. Instead, infrastructure has been abstracted away by the service provider, thus allowing a small team to use automation to expand or contract the computing environment to meet the needs at hand. And when it comes to the cost of computing resources, you only pay for consumption. Nothing drives a CFO closer to the edge of despair than a room full of underutilized hardware eating up the company’s financial resources, producing no return.

So, for cloud computing, what’s not to like? Not much. The migration to cloud computing is growing among companies large and small. By 2024, Gartner projects that more than 45% of IT spending on system infrastructure, infrastructure software, and business process outsourcing will shift from traditional solutions to the cloud.

The bad news is that migrating to the cloud successfully does not happen by magic. It’s not as simple as writing some scripts and paying the bill at month’s end. Many of the issues that need to be addressed in an on-premises environment remain in the cloud, particularly when it comes to application performance, and migration introduces new issues of its own. That does not make cloud migration a bad idea. There is a lot of benefit to migrating to the cloud, provided that your company plans wisely. The key is to understand the issues involved and to follow a solid plan that addresses them, particularly for load testing.

The following sections describe the three key considerations to address as you plan to load test your applications when migrating to the cloud.

1. Make sure your testing plan matches your migration pattern

There are five patterns of cloud migration: rehost, refactor, revise, rebuild, and replace. With the rehost pattern, you move the application to an infrastructure as a service (IaaS) platform, using the cloud to provide raw virtual servers and network capabilities. The work required covers complete provisioning, from OS setup to network configuration. Then, once the infrastructure is created, you add in applications and data.

The next approach is to refactor the application for a platform as a service (PaaS) deployment. It’s similar to the rehost pattern, except that with PaaS the operating system is already installed, along with common components such as SSH, database, and web servers.

You can revise the application for use in either an IaaS or a PaaS deployment. The revise pattern means that you are updating code to work in the given environment. Revision can also involve altering the way security works or how IP addresses are allocated.

The rebuild pattern means you are making considerable changes to the application to enable it to work in a particular PaaS, for example, creating an entirely new application that takes advantage of specific features native to the cloud implementation.

Finally, the replace pattern results in a brand new approach to the application’s architecture as it delivers features to your users. An example would be migrating from an application that uses VMs or containers running Tomcat, MySQL, and RabbitMQ to a serverless approach that uses the functions, databases, and messaging systems native to the cloud provider.

Of course, each approach has its boundaries and ramifications. Thus, load testing methodologies will vary according to the method. Consider this: when you are rehosting an application, load testing will focus more on the overall environment. Do you have enough network bandwidth? Are your VMs provisioned with enough CPUs, memory, and disk to support the load?

When you are replacing a server-based architecture with a serverless approach, load testing needs to focus on application performance at access points rather than on the internal organs of the infrastructure. You have control over the code and data center regions. You do not have control over CPU or memory allocation, which is done by the service provider; hence, the focus on application behavior.

Testing costs time and money. Having a clear understanding of which of the five migration patterns is in play will help you use your company’s resources wisely.

2. Address the myth of cloud elasticity

Elasticity is the characteristic that allows a cloud service to automatically increase and decrease computing capacity to meet the current need. This is good news in that customers pay only for the resources they use. The bad news is that the notion of elasticity creates a myth: that once the provisioning technology senses thresholds are being met, new servers will magically appear to save the day. Sure, the provisioning technology might detect that disaster is at hand. The problem is that by the time the additional VMs are spun up, it could be too late. A complex VM can take 10 to 15 minutes to get up and running. Containers can launch faster but still take time.

The trick is to avoid disaster before it occurs. Companies that live and die by capacity planning understand the ebb and flow of demand. Instead of relying on elasticity automation to ensure that infrastructure capacity meets consumption needs, they anticipate demand and spin up accordingly. For example, Netflix understands that peak usage hours are during movie viewing time between 6 and 10 PM. As a result, they spin up nodes beforehand to meet the demand of these mission-critical hours, using well-defined provisioning templates.
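The proactive approach can be sketched as a simple schedule lookup. This is only an illustration, not how Netflix or any provider actually implements it: the `schedule` shape, the node counts, the one-hour lead time, and the `plannedNodeCount` name are all hypothetical.

```javascript
// Hypothetical capacity schedule. Hours are local time in 24-hour form and
// the node counts are made-up numbers for illustration only.
const schedule = {
  baselineNodes: 4,
  peaks: [{ startHour: 18, endHour: 22, nodes: 12 }], // 6 PM to 10 PM
  leadHours: 1, // assumption: start provisioning one hour before a peak
};

// Return how many nodes should be running at the given hour (0-23).
// Simplification: peaks are assumed not to cross midnight.
function plannedNodeCount(hour, { baselineNodes, peaks, leadHours }) {
  for (const peak of peaks) {
    // Capacity must be in place from (startHour - leadHours) until endHour.
    if (hour >= peak.startHour - leadHours && hour < peak.endHour) {
      return peak.nodes;
    }
  }
  return baselineNodes;
}
```

A load test can walk the same schedule, so that the environment under test is already scaled the way production would be when the peak-usage scenario begins.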

What does this mean regarding load testing?

Load testing is not a one-scenario-fits-all undertaking. Instead, you need to look at all aspects of the computing environment and emulate them accordingly. This means having a detailed understanding of how environments are to be provisioned and the conditions under which expansion and contraction take place. For example, if you are load testing to support peak usage, make sure you create the number of virtual users required to mimic that usage and that the computing environment is provisioned as it will be in production. Also, make sure you accurately mirror the ebb and flow of expansion and contraction. Issues usually don’t happen when everything is as it should be. Usually, things go awry when you transition capacity up and down.
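One way to mirror that ebb and flow is to describe the test as an explicit ramp-up, hold, and ramp-down profile. The sketch below is tool-agnostic. The `buildLoadProfile` name, its parameters, and the one-minute step size are assumptions made for illustration, not the API of any particular load testing product.

```javascript
// Build a list of { minute, virtualUsers } steps: ramp up, hold, ramp down.
// All names and the one-minute granularity are hypothetical.
function buildLoadProfile({ peakUsers, rampUpMinutes, holdMinutes, rampDownMinutes }) {
  const steps = [];
  let minute = 0;
  // Ramp up linearly, reaching peakUsers on the last ramp-up step.
  for (let i = 1; i <= rampUpMinutes; i++) {
    steps.push({ minute: minute++, virtualUsers: Math.round((peakUsers * i) / rampUpMinutes) });
  }
  // Hold at peak so the scaled-up environment is exercised under steady load.
  for (let i = 0; i < holdMinutes; i++) {
    steps.push({ minute: minute++, virtualUsers: peakUsers });
  }
  // Ramp down linearly to zero so capacity contraction is exercised too.
  for (let i = rampDownMinutes - 1; i >= 0; i--) {
    steps.push({ minute: minute++, virtualUsers: Math.round((peakUsers * i) / rampDownMinutes) });
  }
  return steps;
}
```

Because the transitions are spelled out, results can be examined per phase, which makes it easier to see whether problems appear while capacity is changing rather than while it is steady.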

Finally, if your company relies on automation that provisions on demand, make sure that your tests create the threshold conditions defined in well-known service level agreements. Don’t count on assumptions; be fact-based when testing. If you find that automated provisioning works for you, great. For load testing, though, consider any approach to elasticity a nice-to-have. It’s better to break a predefined computing environment and be safe than to accommodate an assumed one and be sorry.
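Being fact-based can be as simple as comparing measured results against the numbers written in the SLA. The following is a minimal sketch. The `sla` fields, the use of 95th-percentile latency, and the function names are assumptions chosen for illustration, and it assumes one latency sample per request.

```javascript
// Nearest-rank percentile of a list of numbers (p between 0 and 100).
function percentile(values, p) {
  if (values.length === 0) throw new Error("no samples");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Compare measured results against hypothetical SLA limits and list breaches.
function checkAgainstSla(latenciesMs, errorCount, sla) {
  const p95 = percentile(latenciesMs, 95);
  const errorRate = errorCount / latenciesMs.length;
  const breaches = [];
  if (p95 > sla.maxP95LatencyMs) {
    breaches.push(`p95 latency ${p95} ms exceeds ${sla.maxP95LatencyMs} ms`);
  }
  if (errorRate > sla.maxErrorRate) {
    breaches.push(`error rate ${errorRate} exceeds ${sla.maxErrorRate}`);
  }
  return { p95, errorRate, breaches, passed: breaches.length === 0 };
}
```

Running a check like this on the samples collected during each scaling transition shows whether the SLA held while capacity was changing, not just on average.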

3. Proceed with caution with serverless functions

What’s not to like about serverless functions? All you need to do is deploy code and wire it into the infrastructure. It doesn’t matter if 10 or a million calls are being made on the code. Making sure there is capacity in place to support the demand is the responsibility of the service provider. What could go wrong, you ask?

    function sortKidsByAge(kids) {
      kids.sort(function (a, b) {
        return a.age < b.age ? -1 : 1;
      });
    }

What is displayed above is an accident waiting to happen. It’s an array sorting function written in JavaScript that can bring the entire event loop of a serverless function running under Node.js to a grinding halt. When the array being sorted is small, the problem remains hidden. When the array is large enough (containing a million items, say), which is not unusual in web-scale applications, all other activity in the Node.js process running the application is blocked until the sorting completes. The impact on performance is undeniable. It doesn’t matter whether the code runs on a server or in a serverless function: it is going to run at a snail’s pace when the array being sorted gets too big. In a serverless environment, the host might ramp up the infrastructure to ease the bottleneck. Yes, the service provider will spin up more instances of the incorrect code behind the scenes to compensate for the shortcoming. These instances will cost you in the long run. The best remedy is just to fix the code.
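The article does not prescribe a specific fix, so the following is just one possible sketch: sort the array in small slices and hand control back to the event loop between slices, then merge the sorted slices. The `sortKidsByAgeInChunks` name, the default chunk size, and the helper functions are hypothetical.

```javascript
// Yield control back to the Node.js event loop so other callbacks can run.
const yieldToEventLoop = () => new Promise((resolve) => setImmediate(resolve));

// Merge two arrays that are already sorted by age into one sorted array.
function mergeByAge(left, right) {
  const merged = [];
  let i = 0;
  let j = 0;
  while (i < left.length && j < right.length) {
    merged.push(left[i].age <= right[j].age ? left[i++] : right[j++]);
  }
  while (i < left.length) merged.push(left[i++]);
  while (j < right.length) merged.push(right[j++]);
  return merged;
}

// Return a new array sorted by age without mutating the input.
async function sortKidsByAgeInChunks(kids, chunkSize = 10000) {
  // Phase 1: sort small slices, yielding after each one.
  let runs = [];
  for (let start = 0; start < kids.length; start += chunkSize) {
    runs.push(kids.slice(start, start + chunkSize).sort((a, b) => a.age - b.age));
    await yieldToEventLoop();
  }
  // Phase 2: merge neighbouring runs pairwise until a single run remains.
  while (runs.length > 1) {
    const next = [];
    for (let i = 0; i < runs.length; i += 2) {
      next.push(i + 1 < runs.length ? mergeByAge(runs[i], runs[i + 1]) : runs[i]);
      await yieldToEventLoop();
    }
    runs = next;
  }
  return runs[0] ?? [];
}
```

This shortens each blocking stretch rather than removing the work. The final merges still touch the whole array in one go, so for very large inputs it may be worth moving the sort to a worker thread or pushing it down to the data store instead.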

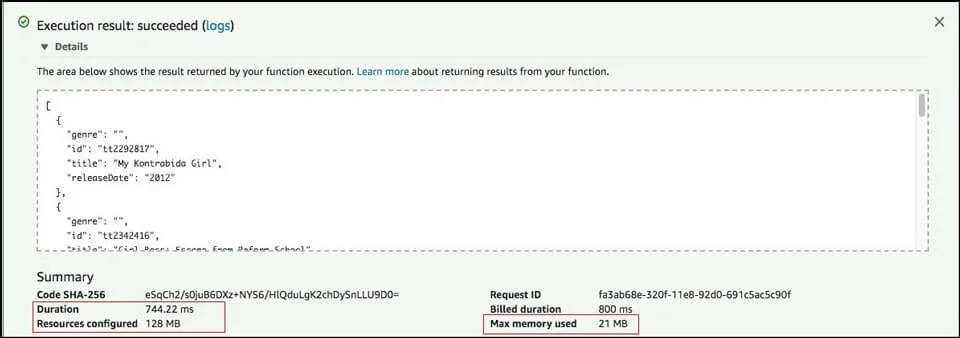

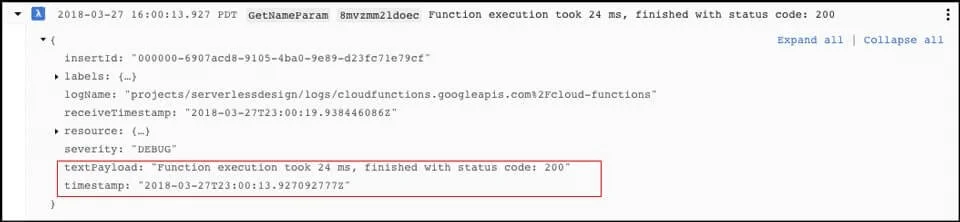

What’s the takeaway? Performance is about behavior, not the environment. When load testing, it’s critical to measure as many aspects of application behavior as is reasonable. When evaluating the performance of an application that uses serverless functions, it’s a good idea to gather the execution time and memory usage of the given service (see Figs. 1 and 2).

Figure 1: AWS Lambda provides information about memory usage and function duration

Figure 2: Google Cloud Functions reports function duration

Typically, in serverless functions, CPU allocation is not published to consumers. So, this aspect will probably not be available for measurement. Still, the bottom line is that serverless functions can suffer from code-level performance issues like any other code. Thus, you need to gather as many metrics as possible to observe behavior accurately.
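Duration and memory can also be sampled from inside the function itself, which is handy when comparing code changes locally before deploying. The sketch below uses only Node.js built-ins (`process.hrtime.bigint()` and `process.memoryUsage()`). The `measure` helper is a hypothetical name, and the heap figure is approximate because garbage collection can run at any time.

```javascript
// Run fn(...args) once and report wall-clock duration and heap growth.
// heapDeltaBytes is a rough indicator only and can even be negative
// if the garbage collector runs during the call.
function measure(fn, ...args) {
  const heapBefore = process.memoryUsage().heapUsed;
  const start = process.hrtime.bigint();
  const result = fn(...args);
  const durationMs = Number(process.hrtime.bigint() - start) / 1e6;
  const heapDeltaBytes = process.memoryUsage().heapUsed - heapBefore;
  return { result, durationMs, heapDeltaBytes };
}

// Example: time a sort over 100,000 generated records (made-up data).
const kids = Array.from({ length: 100000 }, (_, i) => ({ age: (i * 7919) % 18 }));
const stats = measure((list) => [...list].sort((a, b) => a.age - b.age), kids);
console.log(`sort took ${stats.durationMs.toFixed(1)} ms`);
```

Numbers gathered this way complement, rather than replace, the duration and memory figures the provider reports for each invocation.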

Putting it all together

When moving to the cloud, few organizations simply translate their current architecture into cloud instances. Taking the time to identify which pattern of migration you’re planning (rehost, refactor, revise, rebuild, or replace) helps determine the scope of work the migration involves and the load testing required to ensure the cloud instance can withstand anticipated use.

It’s also essential when planning load tests to make sure that cloud instances can scale up and down to meet operational needs as defined by the service level agreement(s). Whether your organization is relying on inferred elasticity to satisfy capacity requirements or applying a more proactive approach using scripted scaling relative to peak usage parameters and predefined instance templates, all testing must reflect the scope of autoscaling. This means making sure that your load tests create virtual users according to each defined usage scenario and that the specification for each cloud environment intended to support a given usage scenario is part of the test plan.

Lastly, as serverless functions become more prevalent in cloud computing, extra attention needs to be given to measuring the behavior of the services under test. Just because a feature is serverless does not mean that it will be automatically configured for optimal performance. Incorrect code is wrong regardless of whether it’s running in an application on a virtual machine or in a serverless function. Performance vigilance is important!

There’s a lot of benefit to migrating to the cloud. Your company can save money and increase the volume and quality of service it provides to its users. The trick is to ensure that comprehensive, state-of-the-art load testing is part of the migration process.

This post was originally published in April 2018 and was most recently updated in July 2021.
