Test Failure Prioritization and Healing

Goal: Automatically identify and resolve test failures that don’t indicate a problem in the application under test

Every morning, developers and testers grab their coffee and start chipping away at all the test failures reported for the nightly regression tests. Even with stable test cases, the number of test failures grows rapidly as test automation rates, test run frequency, and system complexity rise in parallel.

A test failure could be a sign that the latest application changes broke business-critical functionality…or it could stem from a number of other issues. For example, a dependent system (e.g., a third-party application or API) may be temporarily unavailable, broken, or so slow that it’s causing the test to time out. A simulated test environment might not be available or functioning correctly. Or, the test data provisioning process might be feeding the test expired or inappropriate data.

Diagnosing the root cause of a single test failure can take anywhere from minutes to hours. Given that extensive regression test suites can easily produce upwards of 500 test failures, streamlining the diagnosis process could yield significant productivity gains.

Using AI and Machine Learning

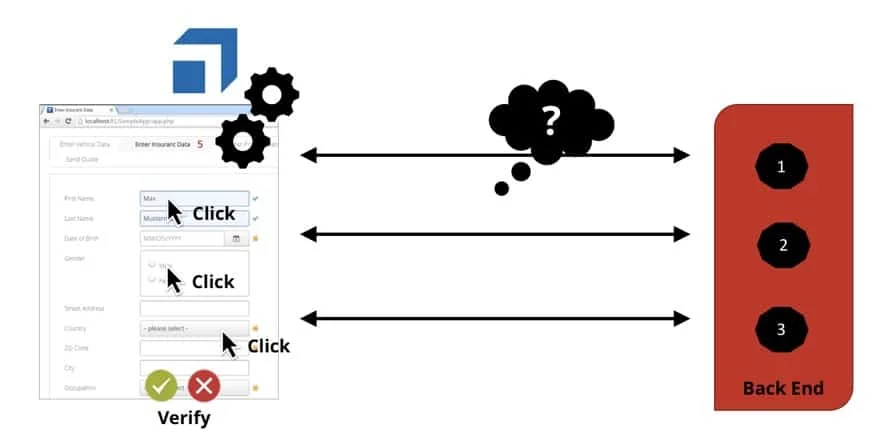

Using AI and machine learning, Tricentis helps teams understand which API and UI test failures indicate actual application issues, and resolve the root cause of test failures caused by extrinsic problems. By watching the system’s back-end behavior during both passed and failed tests, we learn which variations are acceptable and which are problematic. We then use this insight to predict the root cause of a test failure. If we determine that a test is failing due to an issue with the application under test, we provide developers with the most critical details for debugging and resolving the issue.
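
As a rough illustration of learning acceptable back-end behavior from passing runs and using it to label failures, here is a minimal sketch that swaps in a simple rule-based baseline instead of a trained model. It is not Tricentis's implementation. The `BackendCall` record, the `Baseline` class, `predict_root_cause`, and the 1.5x `latency_slack` tolerance are all hypothetical. A failed run is labeled by its first back-end call whose status code never appeared in passing runs, or whose latency exceeds the tolerated maximum. That deviation is then attributed to a dependency or to the application, depending on where it occurred.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

UNDETERMINED = "undetermined: no back-end deviation from passing runs"


@dataclass(frozen=True)
class BackendCall:
    """One back-end interaction observed during a test run (hypothetical schema)."""
    service: str       # name of the called service, e.g. "orders"
    external: bool     # True for third-party or other dependent systems
    status: int        # HTTP-style status code; 0 stands for "unreachable"
    latency_ms: float  # observed response time


@dataclass
class Baseline:
    """Acceptable behavior per service, learned from passing runs only."""
    statuses: Dict[str, Set[int]] = field(default_factory=dict)
    max_latency_ms: Dict[str, float] = field(default_factory=dict)

    def learn(self, passing_run: List[BackendCall]) -> None:
        # Every status and latency seen while a test passed counts as acceptable.
        for call in passing_run:
            self.statuses.setdefault(call.service, set()).add(call.status)
            previous = self.max_latency_ms.get(call.service, 0.0)
            self.max_latency_ms[call.service] = max(previous, call.latency_ms)


def predict_root_cause(baseline: Baseline, failed_run: List[BackendCall],
                       latency_slack: float = 1.5) -> str:
    """Return a coarse root-cause label for a failed run (hypothetical heuristic)."""
    for call in failed_run:
        seen = baseline.statuses.get(call.service)
        if seen is None:
            continue  # no history for this service, so it cannot be judged
        limit = baseline.max_latency_ms[call.service] * latency_slack
        if call.status not in seen or call.latency_ms > limit:
            # An unseen status means "broken"; otherwise the call was only too slow.
            kind = "slow" if call.status in seen else "broken"
            if call.external:
                return f"dependency {kind}: {call.service}"
            return f"application issue: {call.service}"
    # The back end matched passing runs; the cause may lie in the UI, the test,
    # or its data, so leave this failure for manual triage.
    return UNDETERMINED
```

Only failures that deviate on an external service would be candidates for automatic healing; deviations on the application's own services are the ones worth routing to developers.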

In many cases, we can also automatically “heal” the root cause of a test failure. For example, assume that we deduce that a test is failing because it’s trying to interact with a third-party API that’s temporarily unavailable. We can automatically simulate the behavior of that API based on previous successful test runs, enabling testing to proceed as normal.
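
Here is a minimal record-and-replay sketch of that kind of healing, assuming a dependency addressed by HTTP method and path. `ReplayingDependency`, `record_from_passing_run`, and the rule that a status of 500 or higher counts as "unavailable" are hypothetical choices made for illustration, not a Tricentis API. The wrapper forwards each call to the real dependency and serves a response captured during a passing run only when the live call fails.

```python
from typing import Callable, Dict, Optional, Tuple

Key = Tuple[str, str]       # (HTTP method, path); a hypothetical matching rule
Response = Tuple[int, str]  # (status code, body)


class ReplayingDependency:
    """Wraps a dependent API and replays recorded responses when it is down.

    Hypothetical sketch: real service virtualization also matches on headers
    and bodies and can model stateful request sequences.
    """

    def __init__(self, live_call: Callable[[str, str], Response]):
        self.live_call = live_call
        self.recordings: Dict[Key, Response] = {}
        self.simulated_calls = 0  # how often a recording stood in for the real API

    def record_from_passing_run(self, method: str, path: str, response: Response) -> None:
        """Store a response the dependency returned while a test passed."""
        self.recordings[(method, path)] = response

    def call(self, method: str, path: str) -> Response:
        live: Optional[Response] = None
        try:
            live = self.live_call(method, path)
        except (ConnectionError, TimeoutError):
            live = None  # dependency unreachable or too slow
        if live is not None and live[0] < 500:
            return live  # dependency is healthy, so use the real answer
        key = (method, path)
        if key in self.recordings:
            self.simulated_calls += 1
            return self.recordings[key]  # "heal" by simulating the dependency
        if live is not None:
            return live  # nothing to replay; surface the real server error
        raise ConnectionError(f"{method} {path} is unavailable and no recording exists")
```

Matching only on method and path keeps the sketch short. A production virtualization tool would typically also match on headers, query parameters, and request bodies.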
