Find out what Mikko Larkela, CEO and Founder of mySuperMon, experiences when measuring database performance as part of automation testing.

By Mikko Larkela, CEO and Founder, mySuperMon

When database performance is measured as part of automation testing, I see interesting and useful results.

Here are the areas for running one of the test cases:

In a large enterprise application, small code changes are usually reflected in many different services.

For example, new functionality is added to the user interface. The information it needs is not available from the current service, so the developer adds a new search to the general service by adding a new SQL statement that extracts the new data. In local testing, everything works quickly and conveniently. In the acceptance test environment, which uses a small test database, the automation tests and other checks all look green.

However, it is not as simple as that.

In reality, the service change made for the new column is very poorly implemented: it iterates over lists, and several unnecessary SQL statements are executed against the database.

Then the problems begin to surface: several batch jobs slow down, and web services are also found to be slow.

This starts the search for where the fault lies, and several days can easily pass before it is found. In many cases, a comprehensive performance test is not carried out for such a small change, so the problems only surface in production. Comprehensive enterprise application performance testing usually requires a lot of calendar time, and correcting the findings delays the entire delivery schedule.

When we add database monitoring as part of the application’s automation testing, we can immediately catch changes in the number of SQL statements executed by different services. In this case, for example, the change to the general service affected many different use cases.
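To make the idea concrete, here is a minimal sketch of counting SQL statements per use case inside an automated test. It uses Python's built-in `sqlite3` module and its `set_trace_callback` hook as a stand-in for real database monitoring; it does not show how mySuperMon collects its data. The tables and the `search_before` and `search_after` functions are invented for illustration.

```python
import sqlite3


def count_statements(conn, use_case):
    """Run use_case(conn) and return how many SQL statements it executed."""
    executed = []
    conn.set_trace_callback(executed.append)  # called for each executed statement
    try:
        use_case(conn)
    finally:
        conn.set_trace_callback(None)  # stop tracing
    return len(executed)


def make_db(n_users=50):
    # Tiny in-memory database standing in for the test environment (hypothetical schema).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE profiles (user_id INTEGER, nickname TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [(i, f"user{i}") for i in range(n_users)])
    conn.executemany("INSERT INTO profiles VALUES (?, ?)",
                     [(i, f"nick{i}") for i in range(n_users)])
    conn.commit()
    return conn


def search_before(conn):
    # Original service: one query returns everything the UI needs.
    return conn.execute("SELECT id, name FROM users").fetchall()


def search_after(conn):
    # Changed service: one extra query per row to fetch the new column.
    users = conn.execute("SELECT id, name FROM users").fetchall()
    return [
        (uid, name, conn.execute(
            "SELECT nickname FROM profiles WHERE user_id = ?", (uid,)).fetchone()[0])
        for uid, name in users
    ]


conn = make_db()
baseline = count_statements(conn, search_before)
current = count_statements(conn, search_after)
print(f"baseline: {baseline} statements, after change: {current} statements")
```

Both versions return quickly on 50 rows, so a functional test stays green; only the statement count shows that the changed service now issues one extra query per user.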

Another similar problem occurs when a developer changes a SQL statement that is in continuous use, for example by adding a new subquery to the query. In this case, the SELECT starts scanning the index several times. This type of problem cannot be found in smaller test databases, because the database engine reads the entire table quickly. The slowness only appears when the application is tested in a production-like environment.
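The effect of table size can be sketched with SQLite, again only as an analogy for a production database engine. The example below counts SQLite virtual-machine instructions through `set_progress_handler` as a rough proxy for work, and compares a query with and without an added correlated subquery. The `orders` table, the queries, and the row counts are assumptions made for this sketch.

```python
import sqlite3


def vm_steps(conn, sql):
    """Count SQLite virtual-machine instructions spent on sql (a rough proxy for work)."""
    steps = 0

    def tick():
        nonlocal steps
        steps += 1
        return 0  # zero tells SQLite to keep running

    conn.set_progress_handler(tick, 1)  # ask for a callback roughly every instruction
    try:
        conn.execute(sql).fetchall()
    finally:
        conn.set_progress_handler(None, 1)  # remove the handler
    return steps


def make_orders(n_rows):
    # Hypothetical table; no index on customer, so the subquery must scan.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer INTEGER, total REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                     [(i, i % 7, i * 1.5) for i in range(n_rows)])
    conn.commit()
    return conn


PLAIN = "SELECT id, total FROM orders o1"
WITH_SUBQUERY = """
SELECT id, total,
       (SELECT COUNT(*) FROM orders o2 WHERE o2.customer = o1.customer)
FROM orders o1
"""


def extra_work(n_rows):
    """Additional work caused by the subquery at a given table size."""
    conn = make_orders(n_rows)
    return vm_steps(conn, WITH_SUBQUERY) - vm_steps(conn, PLAIN)


small = extra_work(10)    # test-sized table
large = extra_work(300)   # stand-in for a larger, production-like table
print(f"extra work at 10 rows: {small}, at 300 rows: {large}")
```

The table grows by a factor of 30, but the extra work caused by the subquery grows by much more, which is why the regression is easy to miss on a small test database.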

According to AppDynamics, more than half of application performance bottlenecks originate in the database. But most application teams have little or no visibility into database performance.

When we add database monitoring to the application’s normal automation tests as well as its performance tests, we immediately catch changes in the number of rows read by different services compared to the situation before the change. In large enterprise projects, database monitoring as part of performance testing significantly speeds up the correction of performance issues, and this way the entire project has a better chance of success.

mySuperMon is a leader in use case-based database performance monitoring, and Tricentis NeoLoad is a leader in load testing. However, while putting extra load on applications, NeoLoad does not have complete details about the database for a specific use case. That is the important information mySuperMon provides to NeoLoad, so users can check the live details and act on them.

Along with these details, mySuperMon also provides complete statistics to NeoLoad, so users can see the reason for a spike together with the related events.

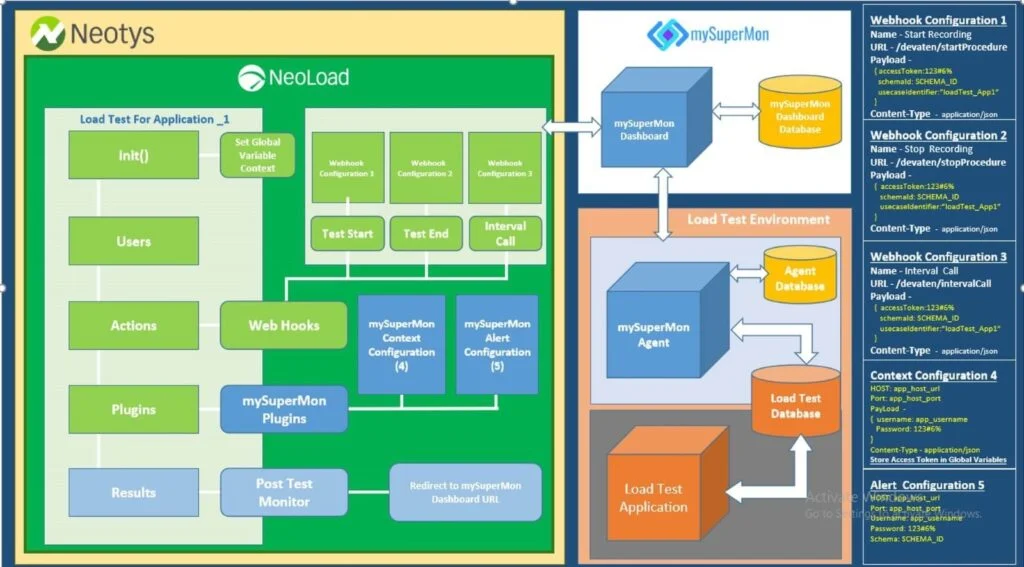

Let’s start with the architectural diagram of how mySuperMon integrates with NeoLoad. We will check the architectural diagram first and then go step by step through how to configure it.

In the diagram above, we configure the application context and then use that application context in the webhooks that call mySuperMon.

The application context is set through the NeoLoad GUI, and the webhook uses that information.

The NeoLoad webhook communicates with the mySuperMon API to start and stop recording and to fetch run details. The webhook then stores that information in the NeoLoad dashboard.
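The order of calls in this flow can be sketched as follows. This is purely illustrative: `InMemoryRecorder` and its `start`, `stop`, and `run_details` methods are hypothetical stand-ins and do not reflect the actual mySuperMon API or the NeoLoad webhook format. The application and use case names are also invented.

```python
from contextlib import contextmanager


class InMemoryRecorder:
    """Hypothetical stand-in for a monitoring service; NOT the mySuperMon API."""

    def __init__(self):
        self.calls = []
        self.active = None

    def start(self, application, use_case):
        # Corresponds to the "start recording" call made at test start.
        self.active = (application, use_case)
        self.calls.append(("start", application, use_case))

    def stop(self):
        # Corresponds to the "stop recording" call made at test end.
        self.calls.append(("stop",) + self.active)
        self.active = None

    def run_details(self):
        # A real service would return database metrics; here we only echo the calls.
        return {"recorded_calls": len(self.calls)}


@contextmanager
def recording(recorder, application, use_case):
    """Start recording with an application context and always stop, even on failure."""
    recorder.start(application, use_case)
    try:
        yield recorder
    finally:
        recorder.stop()


recorder = InMemoryRecorder()
with recording(recorder, "webshop", "checkout"):
    pass  # the load test for this use case would run here

details = recorder.run_details()
print(recorder.calls, details)
```

Wrapping the test run this way guarantees that a stop call follows every start call, so the recorded database activity can be attributed to exactly one use case.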

Add the mySuperMon plugin to NeoLoad. The mySuperMon team will provide you with the plugin to configure in the NeoLoad GUI.

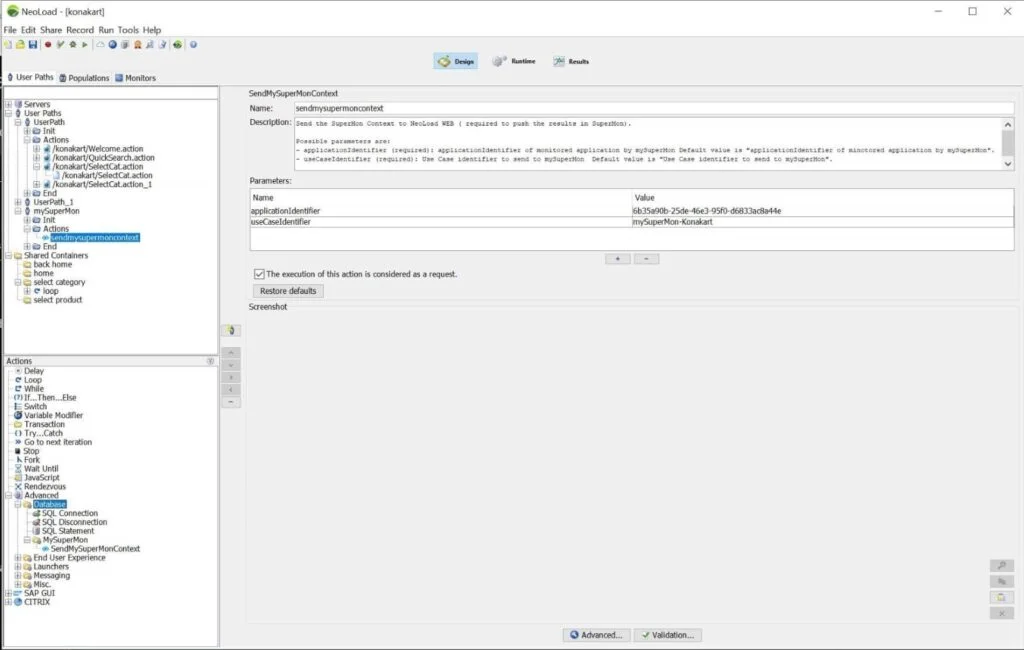

Copy the provided JAR file into the PROJECT_FOLDER/lib/extlib folder. You will then see the new plugin in the NeoLoad GUI under Action -> Database -> mySuperMon.

Now create a new user path by right-clicking User Paths -> Create a User Path -> New User Path.

A popup opens; enter the plugin name “mySuperMon.” This creates a new user path named “mySuperMon” along with three folders.

Now drag and drop the SendMySuperMonContext to action.

That will set the context of your application.

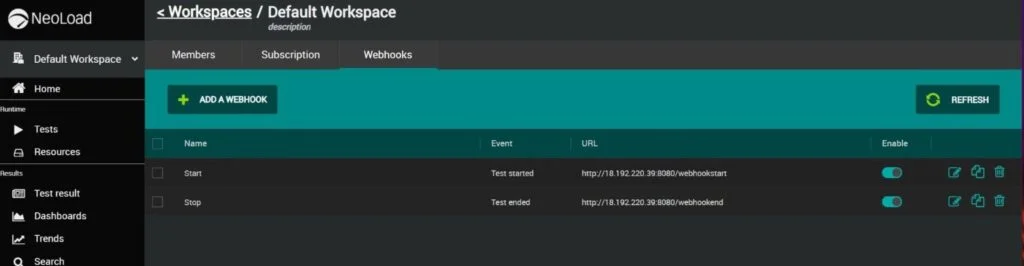

The webhooks make the start-recording and stop-recording calls.

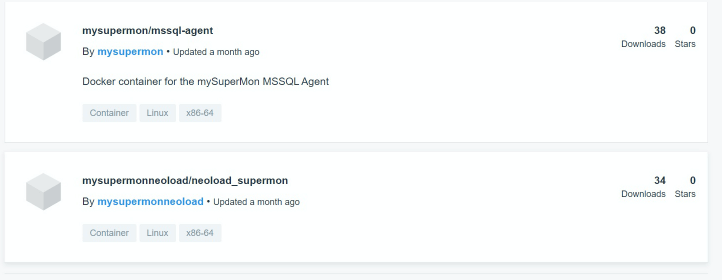

Webhooks communicate with Docker Hub; just search for mySuperMon on Docker Hub.

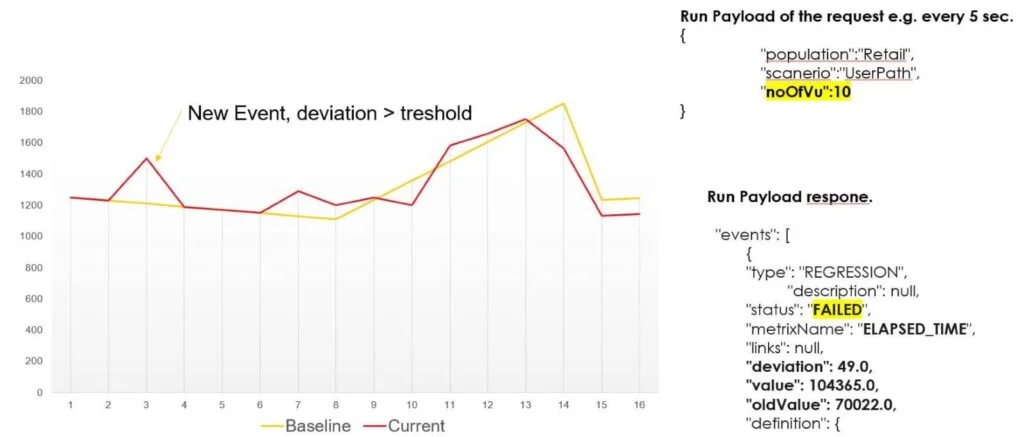

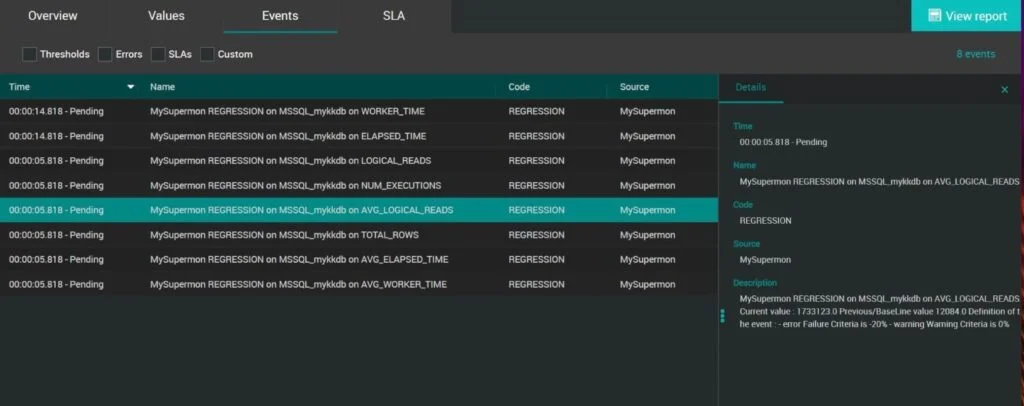

Once setup is complete, you can define which database metrics are sent to NeoLoad SaaS, and mySuperMon continuously compares each execution to the baseline run.

If the deviation exceeds the defined threshold, an event is created.

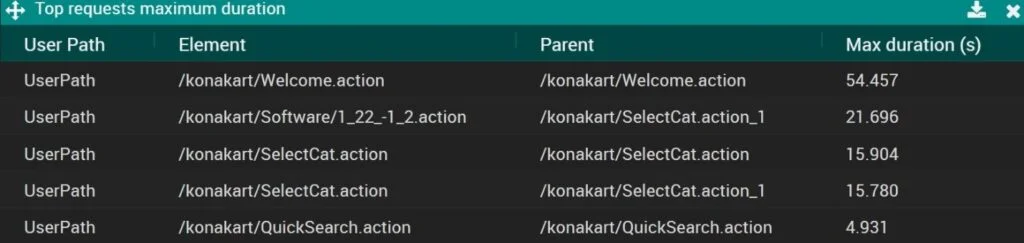
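The threshold rule can be sketched in a few lines. This is an assumed formulation, not mySuperMon's actual logic: deviation is taken as the percentage increase over the baseline, only increases raise an event, and the 20 percent threshold is a made-up value. The rows-read figures are the ones reported later in this article.

```python
from dataclasses import dataclass


@dataclass
class Event:
    metric: str
    baseline: float
    current: float
    deviation_pct: float


def check_deviation(metric, baseline, current, threshold_pct):
    """Return an Event if current exceeds baseline by more than threshold_pct, else None."""
    if baseline == 0:
        # Any activity on a metric that was zero in the baseline counts as unbounded growth.
        deviation = float("inf") if current else 0.0
    else:
        deviation = (current - baseline) / baseline * 100.0
    if deviation > threshold_pct:
        return Event(metric, baseline, current, deviation)
    return None


# Hypothetical threshold of 20 percent; rows-read values taken from the article.
event = check_deviation("rows_read", 1040, 1440240, threshold_pct=20.0)
quiet = check_deviation("rows_read", 1040, 1100, threshold_pct=20.0)
print(event)
print(quiet)
```

With these inputs the first check produces an event, because rows read grew by far more than 20 percent, while the second stays below the threshold and returns nothing.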

In NeoLoad SaaS, users can see the highest durations.

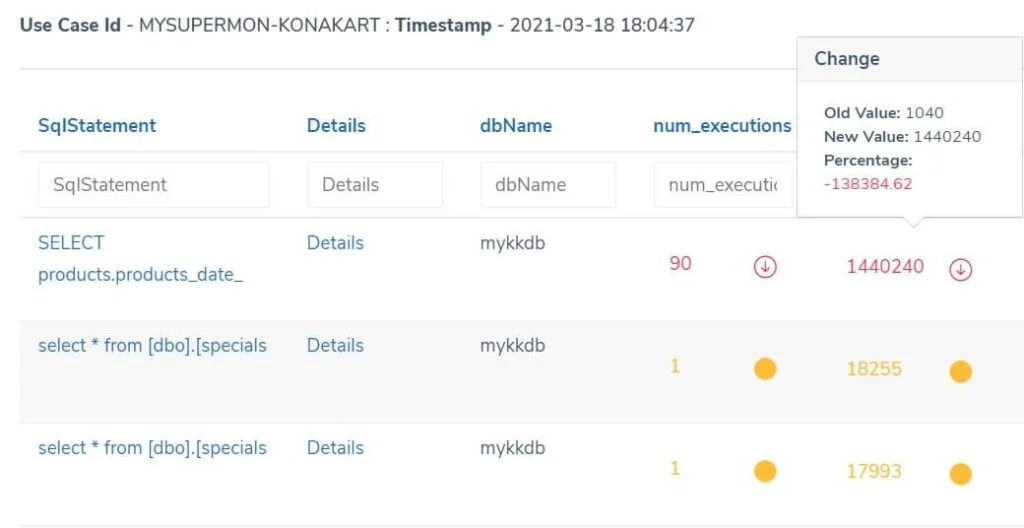

mySuperMon provides a new way to compare SQL statements to the baseline run. Users can easily see what has changed from the baseline and perform root cause analysis fast.

The rows read value has increased due to a new subquery:

old value 1040

new value 1440240

Yellow means that the queries were not present in the baseline run.

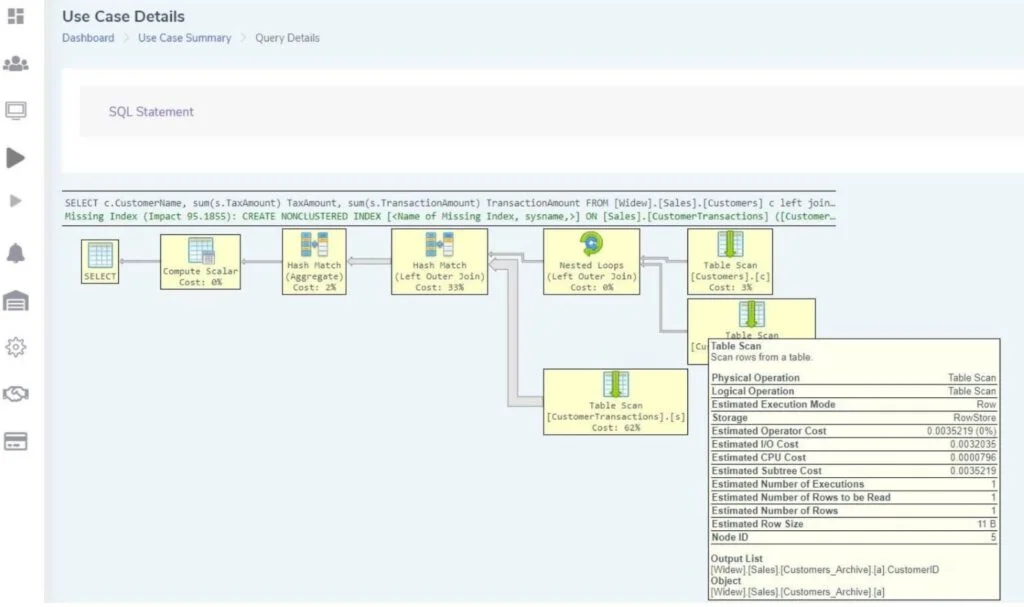
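A baseline comparison of this kind can be sketched as a diff over per-statement metrics. The function below is an illustration under stated assumptions, not mySuperMon's implementation: each run is assumed to be a mapping from SQL text to rows read, and the SQL strings are invented. Only the rows-read values 1040 and 1440240 come from the article.

```python
def compare_to_baseline(baseline, current):
    """Compare two runs given as dicts mapping SQL text to rows read.

    Returns a list of (sql, status, old_rows, new_rows) for statements that
    are new or whose rows-read value changed; unchanged statements are omitted.
    """
    report = []
    for sql, rows in current.items():
        if sql not in baseline:
            # Would be highlighted (yellow in the screenshot): absent from the baseline run.
            report.append((sql, "new", None, rows))
        elif rows != baseline[sql]:
            report.append((sql, "changed", baseline[sql], rows))
    return report


# Invented statements; the rows-read values for the first one are from the article.
baseline_run = {
    "SELECT * FROM orders WHERE customer_id = ?": 1040,
    "SELECT name FROM customers WHERE id = ?": 200,
}
current_run = {
    "SELECT * FROM orders WHERE customer_id = ?": 1440240,
    "SELECT name FROM customers WHERE id = ?": 200,
    "SELECT COUNT(*) FROM audit_log": 50,
}

report = compare_to_baseline(baseline_run, current_run)
for sql, status, old, new in report:
    print(f"{status:8} {old} -> {new}  {sql}")
```

Keeping unchanged statements out of the report leaves only the candidates for root cause analysis.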

There is also a graphical explain available, and a suggestion to add a new index.

Too often, performance is only repaired after the fact, in firefighting mode, wherever the fire happens to be. Beyond this, the indicators reveal a lot more, for example the number of sorts, the number of failed SQL statements, the number of commits and rollbacks, and the time spent waiting for locks.

The product analysis service gives you and your organization the following. The first time you run mySuperMon, you’ll see the performance status of the selected test cases. The product lists, for example, the top 10 best and worst performing use cases with a comparison. mySuperMon then catches the test cases where something has changed compared to the previous test. Further analysis can be carried out on poorly performing services, in which the actual SQL statements can be observed with even more precise monitors.
