How to verify data integrity across all data warehouse sources and target schemas, ETL processes, and business intelligence components.

Editor’s note: Wayne Yaddow is an independent consultant with over 20 years’ experience leading data migration/integration/ETL testing projects at organizations including J.P. Morgan Chase, Credit Suisse, Standard and Poor’s, AIG, Oppenheimer Funds, IBM, and Achieve3000. Additionally, Wayne has taught IIST (International Institute of Software Testing) courses on data warehouse, ETL, and data integration testing. He continues to lead numerous ETL testing and coaching projects on a consulting basis. You can contact him at wyaddow@gmail.com.

Data warehousing and business intelligence users assume, and need, trustworthy data.

In the Gartner IT Glossary, data integrity and data integrity testing are defined as follows:

Data integrity processes should not only help you understand a project’s data integrity, but also help you gain and maintain the accuracy and consistency of data over its lifecycle. This includes data management best practices such as preventing data from being altered each time it is copied or moved. Processes should be established to maintain DWH/BI data integrity at all times. Data, in its production state, is the driving force behind industry decision making. Errors with data integrity commonly arise from human error, noncompliant operating procedures, errors in data transfers, software defects, compromised hardware, and physical compromise to devices.
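One way to check that data was not altered when it was copied or moved is to compare a row count and a checksum for the source and target tables. The following is a minimal sketch under stated assumptions, not a prescribed method: the table names (`src_customer`, `tgt_customer`), the key column, and the helper `table_fingerprint` are hypothetical, and an in-memory SQLite database stands in for real source and target systems.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, SHA-256 hex digest) for a table, reading rows in key order."""
    # Table and column names are interpolated directly, so they must come from
    # trusted test configuration, never from user input.
    digest = hashlib.sha256()
    row_count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        row_count += 1
    return row_count, digest.hexdigest()


# Hypothetical source and target tables (assumption: both fit one SQLite database).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customer (id INTEGER PRIMARY KEY, name TEXT, balance REAL);
    CREATE TABLE tgt_customer (id INTEGER PRIMARY KEY, name TEXT, balance REAL);
    INSERT INTO src_customer VALUES (1, 'Ada', 100.0), (2, 'Grace', 250.5);
    INSERT INTO tgt_customer VALUES (1, 'Ada', 100.0), (2, 'Grace', 250.5);
""")

# Immediately after the copy, both fingerprints should be identical.
unchanged = (table_fingerprint(conn, "src_customer", "id")
             == table_fingerprint(conn, "tgt_customer", "id"))

# Simulate a value that was altered during a later copy or move.
conn.execute("UPDATE tgt_customer SET balance = 205.5 WHERE id = 2")
altered = (table_fingerprint(conn, "src_customer", "id")
           != table_fingerprint(conn, "tgt_customer", "id"))

print(unchanged, altered)
```

A fingerprint mismatch only signals that something differs. Locating the affected rows still requires a row-by-row comparison on the key column.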

This blog focuses on DWH/BI “data integrity testing,” that is, testing processes that support:

We cover how data integrity verification is addressed in each of the above categories.

Other categories of DWH/BI and ETL testing (e.g., functional, performance, security, scalability, system and integration, and end-to-end testing) are not a focus here.

Data Integrity is an umbrella term that refers to the consistency, accuracy, and correctness of data stored in a database. There are three primary types of data integrity:

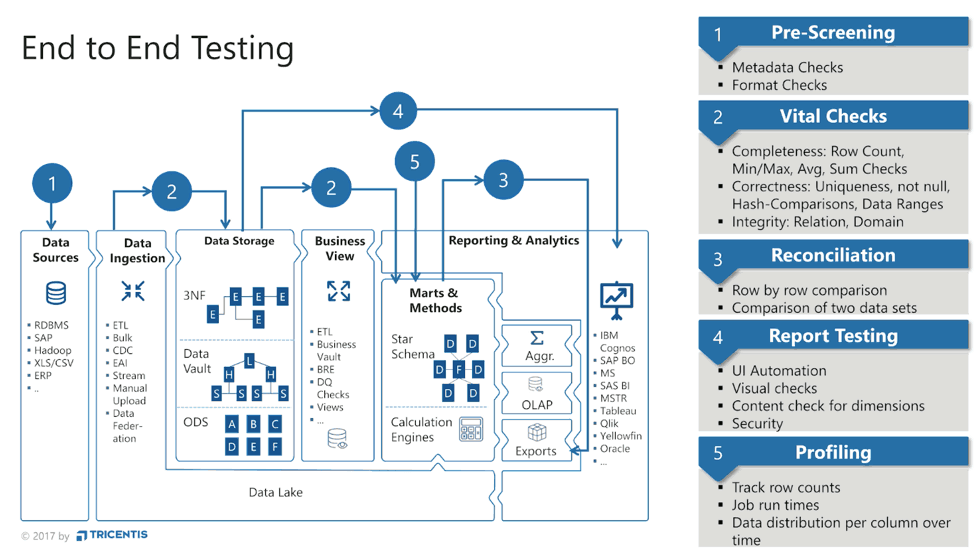

The framework in Figure 1 illustrates the major DWH/BI components that are generally tested in all categories of end-to-end DWH/BI testing. Data integrity testing often requires considerable time and resources.

Figure 1: General Framework for DWH/BI End-to-end Data Verifications

Requirements and schema-level tests confirm to what extent the design of each data component matches the targeted business requirements.

This process should include the ability to verify:

Schema quality is the ability of a schema to represent information and data adequately and efficiently. Here, “schema” refers to the schema of the data warehouse, regardless of whether it is a conceptual, logical, or physical schema, or a star, constellation, or normalized schema. The definition is extended to include the schemas of all data stores used in the data warehouse system, including data sources, staging, the operational data store, and the data marts. It is beneficial to assess schema quality in the design phase of the data warehouse.

Detecting, analyzing and correcting schema deficiencies will boost the quality of the DWH/BI system. Schema quality could be viewed from various dimensions, namely schema correctness, schema completeness, schema conformity, schema integrity, interpretability, tractability, understandability, and concise representation.
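A simple schema-level check compares the designed columns and types against what is actually deployed. The sketch below is illustrative only: the `EXPECTED_SCHEMA` dictionary, the `dim_customer` table, and the `schema_differences` helper are hypothetical, and it reads column metadata through SQLite's `PRAGMA table_info`. Other databases expose the same information through their own catalog views.

```python
import sqlite3

# Hypothetical design for one target table: column name -> declared type.
EXPECTED_SCHEMA = {
    "dim_customer": {"customer_id": "INTEGER", "name": "TEXT", "country": "TEXT"},
}


def schema_differences(conn, expected):
    """List readable differences between the expected and the deployed schemas."""
    problems = []
    for table, expected_cols in expected.items():
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        actual_cols = {
            row[1]: row[2].upper()
            for row in conn.execute(f"PRAGMA table_info({table})")
        }
        for col, col_type in expected_cols.items():
            if col not in actual_cols:
                problems.append(f"{table}.{col}: missing")
            elif actual_cols[col] != col_type:
                problems.append(
                    f"{table}.{col}: expected {col_type}, found {actual_cols[col]}"
                )
        # Columns that exist in the database but not in the design.
        for col in actual_cols.keys() - expected_cols.keys():
            problems.append(f"{table}.{col}: not in design")
    return problems


conn = sqlite3.connect(":memory:")
# The deployed table deviates from the design: wrong type for name,
# no country column, and an undocumented load_ts column.
conn.execute("CREATE TABLE dim_customer (customer_id INTEGER, name INTEGER, load_ts TEXT)")

differences = schema_differences(conn, EXPECTED_SCHEMA)
for line in differences:
    print(line)
```

This covers only correctness and completeness of column definitions. Dimensions such as understandability or concise representation need human review.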

A properly designed ETL system:

Data warehouse integrity testing and evaluations focus on ETL processes. Various functional and non-functional testing methods are applied to test the ETL process logic. The goals are to verify that valid and invalid conditions are correctly processed for all source and target data, ensuring primary and foreign key integrity (i.e., referential integrity), the correctness of data transformations, data cleansing, and application of business rules.
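Primary and foreign key integrity can be probed with two short queries: one for duplicate keys in a dimension table and one for fact rows that reference a missing dimension row. The example below is a sketch with hypothetical tables (`dim_customer`, `fact_order`) that are deliberately created without constraints, on the assumption that the warehouse does not enforce them at load time.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical tables, created without key constraints on purpose.
conn.executescript("""
    CREATE TABLE dim_customer (customer_id INTEGER, name TEXT);
    CREATE TABLE fact_order (order_id INTEGER, customer_id INTEGER, amount REAL);
    INSERT INTO dim_customer VALUES (1, 'Ada'), (2, 'Grace'), (2, 'Grace H.');
    INSERT INTO fact_order VALUES (10, 1, 50.0), (11, 2, 75.0), (12, 3, 20.0);
""")

# Primary key check: any customer_id that appears more than once.
duplicate_keys = conn.execute("""
    SELECT customer_id, COUNT(*)
    FROM dim_customer
    GROUP BY customer_id
    HAVING COUNT(*) > 1
""").fetchall()

# Referential integrity check: fact rows with no matching dimension row.
orphans = conn.execute("""
    SELECT f.order_id, f.customer_id
    FROM fact_order AS f
    LEFT JOIN dim_customer AS d ON d.customer_id = f.customer_id
    WHERE d.customer_id IS NULL
    ORDER BY f.order_id
""").fetchall()

print("duplicate keys:", duplicate_keys)
print("orphaned fact rows:", orphans)
```

Both result sets should be empty after a correct load. Here, customer 2 is duplicated and order 12 points at a customer that does not exist.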

BI applications provide an interface that helps users interact with the back-end data. The design of these reports is critical for understanding and planning the data integrity tests.

Complex business rules are often applied to the original source data, which is then loaded into the data warehouse. The data warehouse information is then delivered in the form of BI dashboards and reports so that business executives can make crucial decisions with confidence. It is therefore essential to ensure that data warehouse data (which is further processed in BI applications) is accurate. Those conducting BI application tests must be prepared to answer the business teams’ questions: “Do these reports reflect the DW numbers correctly? Either way, how can we make sure we are looking at the right data to make the right decisions?”

The traditional approach to BI report testing has several flaws. Identifying and fixing issues discovered in BI reports often takes considerable time. The numbers on BI dashboards are often aggregated to a high level from the lower granularity of the DW, and the raw data is often transformed at each step of its journey to the dashboards. Choosing a value from a BI dashboard and then reconciling it against data warehouse tables is therefore a complicated and tedious process.
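Reconciling a dashboard figure against the warehouse amounts to re-aggregating the detail rows to the dashboard's grain and comparing the two values within a tolerance. The following sketch assumes a hypothetical `fact_sales` table and hand-entered `dashboard_totals`. In practice the dashboard values would come from a report export or the BI tool's API, which is not shown here.

```python
import math
import sqlite3

# Hypothetical figures as displayed on a dashboard: region -> total sales.
dashboard_totals = {"EMEA": 300.0, "APAC": 125.0}

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, region TEXT, amount REAL);
    INSERT INTO fact_sales VALUES
        (1, 'EMEA', 100.0), (2, 'EMEA', 200.0),
        (3, 'APAC', 75.0), (4, 'APAC', 40.0);
""")

# Re-aggregate the detail rows to the dashboard's grain (one total per region).
warehouse_totals = dict(
    conn.execute("SELECT region, SUM(amount) FROM fact_sales GROUP BY region")
)

# Keep every region whose dashboard value is missing from, or differs from,
# the warehouse total by more than half a cent (an assumed tolerance).
mismatches = {
    region: (shown, warehouse_totals.get(region))
    for region, shown in dashboard_totals.items()
    if warehouse_totals.get(region) is None
    or not math.isclose(shown, warehouse_totals[region], abs_tol=0.005)
}

print(mismatches)
```

Each entry in `mismatches` pairs the dashboard value with the warehouse value, which gives a starting point for tracing the difference through the intermediate transformations.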

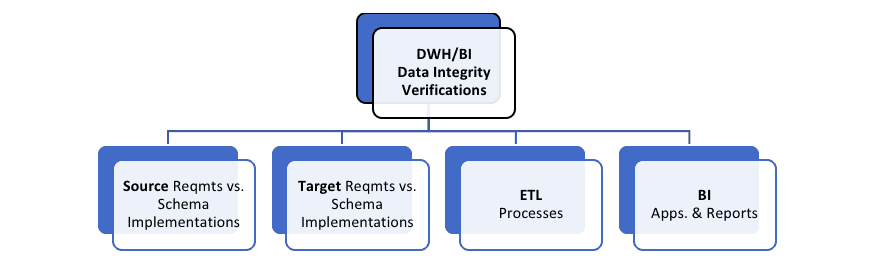

DWH/BI data integrity verification is categorized here as follows. Figure 2 shows a verification classification framework for the techniques applicable to sources and targets in data warehouse, ETL process, and BI report applications.

Figure 2: Framework for DWH/BI Data Integrity Verifications

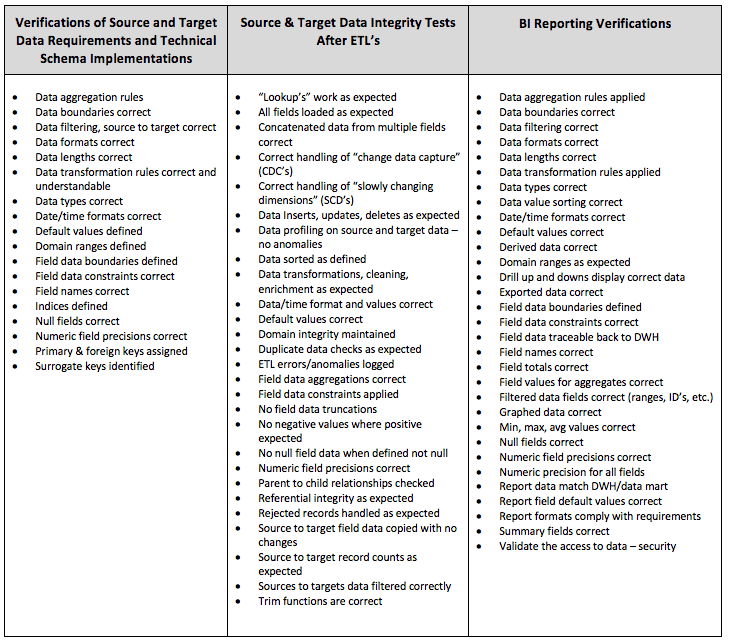

Figure 3: A Sampling of Verifications in the Three Categories of Data Integrity Testing: Schemas, ETL Processes, and BI Reports
