The objective of load testing is to simulate realistic user activity on the application. If the user journey is not representative, or if an appropriate load policy is not defined, the application's behavior under load cannot be properly validated.

Modeling performance tests doesn’t require a technical skill set, just the time to fully understand the ins and outs of the application:

To fully understand the application during performance modeling, the following roles should be involved:

Of course, different functional roles will provide different feedback. The goal should be to understand the application, the end users’ habits, and the relation of the application to the current/future state of the organization.

System logs or database extractions can also be useful. They quickly reveal the main components and functional areas currently used in production, and they can also provide the number of transactions per hour for each business process.

Service Level Agreements (SLAs) and Service Level Objectives (SLOs) are the key to automation and performance validation. The project leader and functional architect need to define target response times for the application; there are no “standard” response times.

SLAs/SLOs enable the performance engineer to report a status on performance testing results. With them, performance testing can easily be automated to detect performance regressions between releases of the application.
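As a minimal sketch of how SLOs make this kind of automation possible, the Python below checks each transaction's 90th-percentile response time against its SLO and against the previous release. The transaction names, the use of p90 as the metric, and the 10% regression tolerance are illustrative assumptions, not settings from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class TransactionResult:
    name: str
    p90_ms: float  # assumed metric: 90th-percentile response time in ms


def slo_violations(results, slos_ms):
    """Names of transactions whose p90 exceeds their SLO."""
    return [r.name for r in results
            if r.name in slos_ms and r.p90_ms > slos_ms[r.name]]


def regressions(baseline, current, tolerance=0.10):
    """Names of transactions more than `tolerance` slower than the baseline."""
    base = {r.name: r.p90_ms for r in baseline}
    return [r.name for r in current
            if r.name in base and r.p90_ms > base[r.name] * (1 + tolerance)]


# Hypothetical SLOs (ms) and results for two releases
slos = {"login": 1000, "search": 2000, "checkout": 3000}
release_1 = [TransactionResult("login", 800),
             TransactionResult("search", 1500),
             TransactionResult("checkout", 2500)]
release_2 = [TransactionResult("login", 850),
             TransactionResult("search", 2100),
             TransactionResult("checkout", 2800)]

print(slo_violations(release_2, slos))    # ['search']
print(regressions(release_1, release_2))  # ['search', 'checkout']
```

In this hypothetical run, `checkout` still meets its SLO but is flagged as a regression against the previous release, which is why comparing against both the SLO and a baseline can be useful.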

If the project recognizes the importance of component testing and performance testing at an early stage, component-level SLAs or SLOs must be defined to enable successful automation.

Each test run will address one risk – and provide status on the performance requirements.

Most projects focus only on reaching an application’s limit. This is important for validating sizing and architecture configuration, but it will most likely not answer all of the performance requirements.

Other tests need to run to properly qualify application performance. These tests will help validate performance requirements:

Other load tests may be needed depending on business constraints and the locations of the users. There are always company or organizational events that will affect the application load.

For example, the load designed for a trading application will depend on the opening hours of different markets. The application will have three typical phases: one for Asian users, a second for European users combined with additional Asian users, and a third for U.S. users, which combines users from all three regions. Every time a market opens or closes, trading activity peaks. Testing should therefore include different load types that combine various business cases.
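A minimal sketch of such a load profile follows. It computes how many concurrent virtual users to simulate for each hour of a trading day, with three phases and peaks when a market opens or closes. The phase windows (UTC hours), user counts, event hours, and the 1.5× peak factor are all hypothetical values chosen for illustration; real figures would come from production logs and the business.

```python
# Hypothetical phases in UTC hours: (start, end_exclusive, users per region)
PHASES = [
    (0, 7, {"asia": 300}),
    (7, 13, {"asia": 150, "europe": 500}),
    (13, 21, {"asia": 100, "europe": 300, "us": 800}),
]
# Hypothetical hours in which a market opens or closes
EVENT_HOURS = {0, 7, 8, 13, 16, 20}
PEAK_FACTOR = 1.5  # assumed extra activity around a market open or close


def users_at(hour):
    """Concurrent virtual users to simulate for a given UTC hour."""
    for start, end, mix in PHASES:
        if start <= hour < end:
            users = sum(mix.values())
            if hour in EVENT_HOURS:
                users = int(users * PEAK_FACTOR)
            return users
    return 0  # outside trading hours


profile = [users_at(h) for h in range(24)]
print(profile)
```

Each phase is modeled as a mix of regions rather than a single total, so the business-case combinations for each region can later be attached to their own share of the load.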

On the other hand, there are also tests that are designed to validate the platform availability during maintenance tasks or a production incident:

Additionally, operational events such as a cache cleanup may need to be included in the load test to measure how the application behaves while they run.

There is yet another factor that can affect application performance: the data. Load tests often use a test database with less data, or at least a database that has been anonymized for security purposes. The anonymization process usually creates records that start with the same letters or digits, so the indexes used during the load test will not be comparable to those of the production database.

Data grows rapidly in production, and database behavior differs considerably depending on database size. If the database has a long lifetime, it may make sense to validate performance with databases of different sizes to determine whether the limit differs between a light and a heavy database. To achieve this, use a large dataset that points to various areas of the database (e.g., never use a list of names where all the accounts start with AA or AAA2). Instead, keep a representative set of data from A to Z.
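The sketch below shows one way to check and build a representative set of test keys. It measures how much of the A to Z range the leading characters cover, and it samples keys evenly across leading letters. Treating the first character as a proxy for "areas of the database" is a simplifying assumption: it fits an index ordered by name, but real index distribution depends on which columns are indexed. The account names are hypothetical.

```python
import random
import string


def leading_letter_coverage(keys):
    """Fraction of the letters A-Z that start at least one key."""
    firsts = {k[0].upper() for k in keys if k}
    return len(firsts & set(string.ascii_uppercase)) / 26


def sample_across_alphabet(keys, per_letter, seed=0):
    """Pick up to `per_letter` keys per leading character so the test data
    touches every region of an index ordered by name."""
    rng = random.Random(seed)
    buckets = {}
    for k in keys:
        if k:
            buckets.setdefault(k[0].upper(), []).append(k)
    sample = []
    for first in sorted(buckets):
        group = buckets[first]
        sample.extend(rng.sample(group, min(per_letter, len(group))))
    return sample


# Hypothetical account names: a skewed anonymized set vs. a spread set
skewed = ["AA0001", "AA0002", "AAA2X9", "AAA2Y4"]
spread = [f"{c}{i:04d}" for c in string.ascii_uppercase for i in range(50)]

print(leading_letter_coverage(skewed))  # only 'A' is covered
print(leading_letter_coverage(sample_across_alphabet(spread, per_letter=5)))  # 1.0
```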

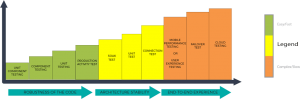

The type and complexity of the test will change during the project lifecycle:

An important element of performance design, think time is the time needed by a real user between two business actions:

Because every real-world user behaves differently, think times will always be different. Therefore, it is important:

Imagine the application is a castle with big walls and doors. The components that will be validated (via load testing) are inside the castle. The walls represent proxy servers, load balancers, caching layers, firewalls, etc.

If a test is run without think times, only the main door is hit. It may break, but it is far more likely that the defenses will lock everything out after a short time. Be gentle and smart, and hide from the defense: the main door will let you in, and the components inside the castle can be properly load tested.
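To make the think-time idea concrete, here is a minimal sketch that varies each pause randomly around a base value so that no two virtual users move in lockstep. The journey, its base think times, and the uniform ±20% distribution are assumptions for illustration. In practice, the load testing tool's own think-time settings would typically be used.

```python
import random


def think_time(base_seconds, variation=0.2, rng=random):
    """Random think time within +/- `variation` of `base_seconds`."""
    return rng.uniform(base_seconds * (1 - variation),
                       base_seconds * (1 + variation))


# Hypothetical user journey: (business action, base think time in seconds)
JOURNEY = [("open_home", 5), ("search", 10),
           ("view_product", 20), ("add_to_cart", 8)]


def journey_pauses(journey, variation=0.2, seed=None):
    """Think time to wait after each action; a seed makes runs reproducible."""
    rng = random.Random(seed)
    return [(action, think_time(base, variation, rng))
            for action, base in journey]


for action, pause in journey_pauses(JOURNEY, seed=42):
    print(f"{action}: wait {pause:.1f} s")
```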

As mentioned earlier, once the application is assembled, testing objectives will change. At some point, the quality of the user experience needs validation too.

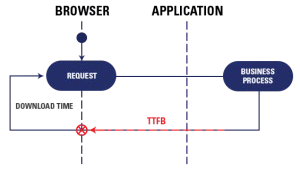

Mobile applications, rich Internet applications (RIA), and complex AJAX frameworks challenge the way most teams are used to measuring response times. In the past, measurements were limited to download time and time to first byte (TTFB).

This approach no longer makes sense, because much of the rendering time that contributes to the user experience depends on local ActiveX/JavaScript or native application logic. Measurements therefore cannot be limited to TTFB and download time, because the main objective is to validate the overall user experience of the application.

Measuring the user experience is possible by combining two solutions: load testing software (Tricentis NeoLoad) and a browser-based or mobile testing tool.

The load testing tool generates 98% of the load on the application. The browser-based or mobile testing tool generates the remaining 2% to capture the real user experience (including rendering time) while the application is under load.
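As a small worked example of the 98/2 split, the sketch below divides a virtual-user budget between the two tools. Treating "share of the load" as "share of virtual users" is a simplifying assumption, and so is the rule of always keeping at least one real-browser user.

```python
def split_load(total_users, browser_share=0.02):
    """Split virtual users between the protocol-level load tool and the
    browser/mobile tool that measures the real user experience."""
    browser_users = max(1, round(total_users * browser_share))
    return total_users - browser_users, browser_users


print(split_load(1000))  # (980, 20)
print(split_load(10))    # (9, 1): keep at least one real-browser user
```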

This means that the business processes and transactions to be monitored by the browser/mobile-based solutions need to be carefully identified.

Running tests without monitoring is like watching a horror movie on the radio. You will hear people scream without knowing why. Monitoring is the only way to get metrics related to the behavior of the architecture.

However, many projects tend to lack performance monitoring due to:

Monitoring is not limited to the operating systems of the servers; its purpose is to validate that each layer of the architecture is available and stable. Architects took time to build the smartest architecture, so it is necessary to measure how each layer behaves.

Monitoring provides a more comprehensive understanding of the architecture and makes it possible to investigate the behavior of the various pieces of the environment:

Many projects use production monitoring tools to retrieve metrics from the architecture. This approach is not recommended, because production monitoring has coarse granularity, with data points only every 2 to 5 minutes. In load testing, monitoring data should be collected at least every five seconds. The performance engineer needs every opportunity to identify bottlenecks: when a peak lasts only a few seconds during a test, enough granularity is vital to pinpoint the bottleneck.
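The following sketch shows why granularity matters. It takes a hypothetical per-second CPU series with a 10-second spike and averages it into 5-second and 5-minute buckets. Averaging per bucket is an assumption about how the coarser tool aggregates data. Under that assumption, the spike stays visible at 5-second granularity but is almost entirely smoothed away at 5 minutes.

```python
def average_samples(series, interval):
    """Downsample a per-second series by averaging fixed-size windows."""
    return [sum(series[i:i + interval]) / len(series[i:i + interval])
            for i in range(0, len(series), interval)]


# Hypothetical CPU utilization (%) over a 10-minute test, one value per second:
# a steady 40% with a 10-second spike to 100% starting at t = 200 s.
cpu = [40.0] * 600
for t in range(200, 210):
    cpu[t] = 100.0

fine = average_samples(cpu, 5)      # 5-second granularity
coarse = average_samples(cpu, 300)  # 5-minute granularity
print(max(fine))    # 100.0
print(max(coarse))  # 42.0
```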

Monitoring has technical prerequisites, such as a system account, services that must be started, firewall ports that must be opened, etc. Even if these seem difficult to meet, monitoring is possible with proper communication with Operations; sending them the requirements in advance will facilitate that communication.
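One way to anticipate these requirements is a small pre-flight check, run before the test, that confirms the monitoring ports are reachable from the load testing machine. This is a minimal sketch using only Python's standard `socket` module. The hostnames and ports in `TARGETS` are hypothetical placeholders, and a successful TCP connection shows only that a firewall port is open, not that the monitoring account or services are correctly configured.

```python
import socket


def port_open(host, port, timeout=2.0):
    """True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or hostname not resolvable
        return False


# Hypothetical monitoring targets: replace with the real servers and ports
TARGETS = [("app-server-01", 22), ("db-server-01", 1521)]


def preflight(targets):
    """Map 'host:port' to whether the port is reachable."""
    return {f"{host}:{port}": port_open(host, port) for host, port in targets}
```

Running `preflight(TARGETS)` well before the test window leaves time to send any unreachable ports to Operations.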

A key takeaway for monitoring: Anticipate requirements at an early stage.

Next steps

This is the third article in a four-part series focused on practical guidance for modern performance testing:

Part 1 – A practical introduction to performance testing

Part 2 – Establishing a performance testing strategy

Part 3 – Modeling performance tests

Part 4 – Executing performance tests

The post was originally published in January 2018 and was most recently updated in July 2021.