Most enterprise organizations are guilty of both too few AND too many software tests, leaving them overexposed to risk and unable to pivot quickly.

Like many Austrians, I enjoy “uphill skiing” — attaching skins to my skis, hiking up a mountain, perhaps taking a break for a comforting beverage, then gliding on down. This requires carrying a certain amount of gear. Even in low-risk situations, you need a pack with your goggles, a first aid kit, a map, extra insulation layers, water, some food, and maybe a small headlamp. For a more remote/rugged location, you probably want to increase your food supply, plus add in an avalanche transceiver, shovel, snow probe, and the like.

It’s a delicate balancing act. You don’t want to skip something that you might really need — in case you get caught in an avalanche, for example. But, if you bring too much, you won’t be able to achieve the desired speed and agility. In the worst-case scenario, you have a heavy pack stuffed with too much of one thing but not enough of another — leaving you simultaneously unprepared and overloaded.

That’s where most enterprise organizations stand with respect to software testing: guilty of both too few AND too many tests, overexposed to risk and unable to pivot fast. Let me explain…

Most “bespoke” custom applications are actually overtested. Overtesting is especially prevalent when testing is outsourced to a service provider whose payment depends on how many tests are defined and automated. It happens all too often, and it leads to companies overpaying for testing that yields limited business value.

When testers are measured by the quantity of tests they produce, you tend to get way more tests than you actually need — and the release is often delayed as all these tests are being created, executed, and updated. This is one of the main reasons why testing is commonly cited as the #1 bottleneck in the application delivery process. Even worse, you usually don’t end up with the right tests. You just get a lot of tests that check the same thing over and over.

How do you fix this? Adopt a new currency for testing: focus on business risk coverage instead of the number of test cases.

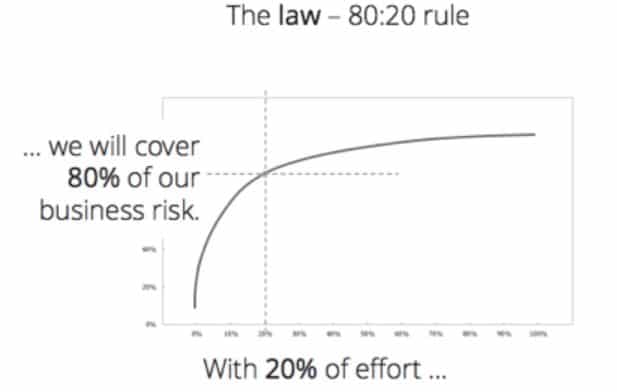

You’ve probably heard of the 80/20 rule (i.e., the Pareto principle). Most commonly, this refers to the idea that 20% of the effort creates 80% of the value. The software development equivalent is that 20% of your transactions represent 80% of your business value… and that tests for 20% of your requirements can cover 80% of your business risk.
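
To make the 80/20 idea concrete, here is a minimal Python sketch. It assumes each requirement has already been given a numeric risk weight, and the requirement names and weights are hypothetical. Sorting by weight and accumulating until the target share is reached gives the smallest set of requirements that covers that share of risk, because no other set of the same size can carry more weight.

```python
from typing import Dict, List


def select_for_risk_target(risk_weights: Dict[str, float], target: float = 0.8) -> List[str]:
    """Return the fewest requirements whose combined risk weight reaches `target`
    (a fraction of total risk), taking the highest-risk requirements first."""
    total = sum(risk_weights.values())
    if total <= 0:
        return []
    selected: List[str] = []
    covered = 0.0
    for name, weight in sorted(risk_weights.items(), key=lambda kv: kv[1], reverse=True):
        if covered / total >= target:
            break  # the target share of risk is already covered
        selected.append(name)
        covered += weight
    return selected


# Hypothetical risk weights for ten requirements (they sum to 100)
weights = {
    "checkout": 50, "payment": 30, "login": 5, "search": 4, "profile": 3,
    "wishlist": 2, "reviews": 2, "newsletter": 2, "help": 1, "about": 1,
}
print(select_for_risk_target(weights))  # ['checkout', 'payment']
```

In this made-up example, two of ten requirements (20%) carry 80% of the weight. In a real project, the shape of the distribution depends entirely on how the business assesses risk.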

Even if testers are truly trying to cover your top business risks (vs. just create a certain number of tests), getting the right tests is not easy. Working intuitively, most teams achieve only 40% risk coverage, and end up accumulating a test suite that has a high degree of redundancy (a.k.a. “bloat”). On average, 67% of tests don’t contribute to risk coverage—but they make the test suite slow to execute and difficult to maintain. Returning to our initial analogy, this is like loading up your backpack with nothing but water. It’s more water than you’d ever feasibly need in the snow, and that water is taking up space and weight that would be better allocated to essentials like food and an extra insulation layer.
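
One way to spot this kind of bloat can be sketched under simplifying assumptions. Treat each test as the set of requirements it exercises. Greedily keep whichever test adds the most not-yet-covered risk, and flag any test that adds nothing new as redundant. This is a generic greedy set-cover heuristic, not any particular tool's algorithm, and the test names, requirements, and weights are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def find_redundant_tests(
    tests: Dict[str, Set[str]], risk_weights: Dict[str, float]
) -> Tuple[List[str], List[str], float]:
    """Greedily keep the test that adds the most uncovered risk; tests that add
    nothing new are reported as redundant. Returns (kept, redundant, coverage)."""
    total = sum(risk_weights.values())
    covered: Set[str] = set()
    remaining = dict(tests)
    kept: List[str] = []
    while remaining:
        # Marginal risk each remaining test would add beyond what is already covered
        gains = {
            name: sum(risk_weights.get(r, 0.0) for r in reqs - covered)
            for name, reqs in remaining.items()
        }
        best = max(gains, key=gains.get)
        if gains[best] <= 0:
            break  # every remaining test only re-checks covered requirements
        kept.append(best)
        covered |= remaining.pop(best)
    redundant = sorted(remaining)
    coverage = sum(risk_weights.get(r, 0.0) for r in covered) / total if total else 0.0
    return kept, redundant, coverage


weights = {"checkout": 50, "payment": 30, "login": 20, "refunds": 25}
tests = {
    "checkout_visa": {"checkout"},
    "checkout_mastercard": {"checkout"},
    "checkout_and_pay": {"checkout", "payment"},
    "login_basic": {"login"},
    "pay_by_card": {"payment"},
    "checkout_retry": {"checkout"},
}
kept, redundant, coverage = find_redundant_tests(tests, weights)
print(f"kept={kept}, redundant={redundant}, risk coverage={coverage:.0%}")
```

Four of the six tests add no risk coverage, yet the suite still misses the refunds requirement entirely. It has too many tests and too few at the same time.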

If “the business” gives testers an accurate assessment of how application functionality maps to business risk, the testers can cover those risks extremely efficiently. This is a huge untapped opportunity to make testing both faster and more impactful. If testers understand how risk is distributed across the application and know which 20% of the transactions are correlated to that 80% of the business value, it’s entirely feasible to cover the top business risks without an exorbitant testing investment.

Once it’s clear what really needs to be tested, the next step is determining how to test it as efficiently as possible. Just as all requirements are not created equal (from a risk perspective), the same holds true for the tests created to validate those requirements. A single strategically designed test can achieve as much risk coverage as 10 other tests that were “intuitively” designed for the same requirement, if not more.

This is where test case design methodology comes in. With an effective test case design strategy such as linear expansion, testers can test the highest-risk requirements as efficiently as possible. They are guided to the fewest possible tests needed to 1) reach your risk coverage targets AND 2) ensure that when a test fails, the team knows exactly what application functionality to investigate.
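
The core idea behind linear expansion can be sketched as follows. This is a simplified reading of the technique, not Tricentis’s implementation. Start from one baseline combination of attribute values, then derive each further test case by changing exactly one attribute. The number of cases grows with the sum of the alternative values rather than their product. Because each case differs from the baseline in a single attribute, a failure points straight at that attribute. The checkout attributes and values are hypothetical.

```python
from typing import Dict, List


def linear_expansion(
    baseline: Dict[str, str], alternatives: Dict[str, List[str]]
) -> List[Dict[str, str]]:
    """Baseline case first, then one case per non-baseline value, each changing
    exactly one attribute relative to the baseline."""
    cases = [dict(baseline)]
    for attribute, values in alternatives.items():
        for value in values:
            if value == baseline[attribute]:
                continue  # already exercised by the baseline case
            case = dict(baseline)
            case[attribute] = value
            cases.append(case)
    return cases


baseline = {"customer": "existing", "payment": "credit card", "shipping": "standard"}
alternatives = {
    "customer": ["existing", "new", "guest"],
    "payment": ["credit card", "invoice", "PayPal"],
    "shipping": ["standard", "express"],
}
cases = linear_expansion(baseline, alternatives)
print(len(cases))  # 6, versus 3 * 3 * 2 = 18 exhaustive combinations
for case in cases:
    changed = [k for k in baseline if case[k] != baseline[k]]
    print(changed or "baseline", case)
```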

When it comes to tests for custom apps, less is more. More value to the team and stakeholders. More time to spend getting the tests implemented on time so you can release on time. And more agility when the project changes course and the test suite needs a major update.

Packaged apps like SAP and Salesforce fall on the opposite end of the overtesting-undertesting spectrum. What’s the most common strategy for testing an SAP enterprise application change — be it a custom update, a service pack, or an emergency fix? Don’t test the change at all!

Well, maybe that’s not entirely accurate. Most organizations do some testing of their packaged applications. When an update needs to be deployed into production, they make their key users test it.

But guess what? Key users don’t like to test. Testing packaged app updates is often a lengthy, manual ordeal. Business process tests may be outdated — or worse, undocumented — making the exercise frustrating and error-prone. And these testing duties are piled on top of key users’ already busy schedules. Some key users have admitted to me that, to hurry things along, they primarily test use cases that they know will pass. Their strategy: “We test for green!”

None of this is news to operations teams, of course. To deal with the lack of effective pre-release testing, they routinely add a “hypercare” phase immediately after the go-live of an update. Hypercare is an “all hands on deck” period during which the organization’s most expensive resources (typically developers and project staff) are put on standby to fix emergency issues as they pop up in production. To be clear — these are issues that were not discovered in pre-release testing, when they would have been significantly easier and cheaper to fix! Because hypercare phases are so common — and so costly — there are companies that specialize in providing hypercare support to customers.

More than 90% of SAP enterprise customers opt for this deployment strategy, which is as lengthy as it is expensive. Key user testing typically lasts one to two weeks. A hypercare phase can last up to three months, during which much of the burden caused by defects is felt by — you guessed it — those poor key users.

For the majority of organizations that rely on key user testing plus hypercare to test their packaged apps, I have bad news and good news. First, the bad news: SAP (as well as other packaged app vendors) will be delivering updates much more frequently than ever before. This means customers must find ways to keep up.

Now the good news. There is a better alternative to key user testing and hypercare: change impact analysis. Change impact analysis analyzes your entire SAP ecosystem overnight and reports which objects are impacted by a change.

In short: it will help you zero in on exactly what needs to be tested. You’ll have the tests you need to survive, but you won’t end up burdened by more tests than you really need.

There is, however, one caveat with respect to change impact analysis. Given the complexity of large packaged apps, a change in a certain central component could potentially affect many objects, which will be shown as “impacted.” This diminishes the technique’s effectiveness. To lighten your testing load, narrow down the tests further, taking the object dependencies into consideration (focusing on the “most-at-risk” objects).
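
One way to apply that narrowing step can be sketched with an assumed heuristic: measure how many dependency hops separate each impacted object from the change, and keep only the closest ones. Hop distance is an assumed stand-in for “most at risk,” chosen only for illustration. The object names and the `max_hops` cutoff are hypothetical. Other signals, such as how heavily an object is used in production, could be layered on top, but they are not modeled here.

```python
from collections import deque
from typing import Dict, List, Set


def hops_from_change(depends_on: Dict[str, Set[str]], changed: Set[str]) -> Dict[str, int]:
    """Breadth-first search over reverse dependencies, recording the fewest
    dependency hops between each impacted object and a changed object."""
    dependents: Dict[str, Set[str]] = {}
    for obj, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(obj)
    distance = {obj: 0 for obj in changed}
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for user in dependents.get(current, set()):
            if user not in distance:  # BFS reaches each object first by a shortest path
                distance[user] = distance[current] + 1
                queue.append(user)
    return distance


def most_at_risk(depends_on: Dict[str, Set[str]], changed: Set[str], max_hops: int = 1) -> List[str]:
    """Keep only impacted objects within `max_hops` of the change."""
    distance = hops_from_change(depends_on, changed)
    return sorted(obj for obj, hops in distance.items() if hops <= max_hops)


# A central component that many objects rely on, directly or indirectly
depends_on = {
    "pricing": {"currency_conversion"},
    "payroll": {"currency_conversion"},
    "sales_order": {"pricing"},
    "invoice": {"sales_order"},
    "dashboard": {"invoice"},
}
print(hops_from_change(depends_on, {"currency_conversion"})["dashboard"])  # 4
print(most_at_risk(depends_on, {"currency_conversion"}))  # ['currency_conversion', 'payroll', 'pricing']
```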

Modern applications ingest, integrate, and transform massive amounts of data. There are countless opportunities for data to become compromised and usually few (if any) formalized processes to ensure data integrity across the complete data landscape.

Maybe you’ve managed to achieve just the right amount of testing across all the custom apps and packaged apps that collect data and transform it into valuable information. But how often, and how thoroughly, is the underlying data being tested? Every other component of your system might look like it’s performing exactly as expected, but if the data is off, the business need is not being met. In fact, the business is being placed at extreme risk.

Assume a packaged application update introduces a subtle change to data formats — a change that prevents 1 out of every 100,000 records from being processed. Would you know immediately, or would it go unnoticed until an irate customer (or a fastidious regulator) came calling? What if your organization introduces a new field (e.g., for COVID-19 test status), then a month later one team’s bug fix ends up overwriting that status whenever the user profile is updated?

With automated “quality gates” constantly checking the data as it enters and moves throughout your applications, you could catch such issues as they’re introduced. You could also eliminate them before they impact the business and require a massive amount of manual data inspection/repair.
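
A quality gate like this can be sketched as a check that runs between two processing steps. It assumes records carry a unique key, and the field names and sample records below are hypothetical. It reconciles records between steps, which would catch the 1-in-100,000 loss, and verifies that protected fields, such as the test-status field in the example above, come through unchanged.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Record = Dict[str, str]


@dataclass
class GateResult:
    passed: bool
    failures: List[str] = field(default_factory=list)


def quality_gate(
    source: List[Record], target: List[Record], key: str, protected_fields: List[str]
) -> GateResult:
    """Fail if any source record is missing downstream or if a protected field
    changed value between the two steps."""
    failures: List[str] = []
    target_by_key = {rec[key]: rec for rec in target}

    # Reconciliation: every record that went in must come out
    missing = [rec[key] for rec in source if rec[key] not in target_by_key]
    if missing:
        failures.append(f"{len(missing)} of {len(source)} records missing: {missing[:5]}")

    # Protected fields must survive unrelated updates (e.g., a profile edit)
    for rec in source:
        out = target_by_key.get(rec[key])
        if out is None:
            continue  # already reported as missing
        for name in protected_fields:
            if rec.get(name) != out.get(name):
                failures.append(
                    f"record {rec[key]}: {name!r} changed from {rec.get(name)!r} to {out.get(name)!r}"
                )
    return GateResult(passed=not failures, failures=failures)


source = [
    {"id": "1", "name": "Ana", "test_status": "negative"},
    {"id": "2", "name": "Ben", "test_status": "positive"},
    {"id": "3", "name": "Cy", "test_status": "pending"},
]
# After a profile update: record 3 was dropped and record 2 lost its status
target = [
    {"id": "1", "name": "Ana M.", "test_status": "negative"},
    {"id": "2", "name": "Ben", "test_status": ""},
]
result = quality_gate(source, target, key="id", protected_fields=["test_status"])
print(result.passed)  # False
for failure in result.failures:
    print(failure)
```

The name change on record 1 passes because only `test_status` is protected. Deciding which fields a gate guards is a business call, much like deciding which requirements carry the most risk.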

So far, financial, insurance, and healthcare companies have been leading the charge with automated end-to-end data testing. They’re achieving some remarkable results — like the ability to automatically test 200 million values in just 20 minutes. If the applications you’re working on consume, manipulate, or output data (and whose aren’t?), don’t leave this essential behind. Without it, subtle data issues can rapidly snowball into a crisis that you’d need quite an avalanche shovel to dig out of.

NOTE: This content was originally published in The New Stack.
