Tricentis AI Workspace overview

Explore how Tricentis AI Workspace enables autonomous quality at...

How applying DevOps-style test automation to DWH/BI and other data integration projects can guarantee a high level of data quality

Editor’s note: Wayne Yaddow is an independent consultant with over 20 years’ experience leading data migration/integration/ETL testing projects at organizations including J.P. Morgan Chase, Credit Suisse, Standard and Poor’s, AIG, Oppenheimer Funds, IBM, and Achieve3000. Additionally, Wayne has taught IIST (International Institute of Software Testing) courses on data warehouse, ETL, and data integration testing. He continues to lead numerous ETL testing and coaching projects on a consulting basis. You can contact him at wyaddow@gmail.com.

Gartner recently stated that between 70 and 80 percent of business intelligence initiatives initially end up failing, but many resume due to their importance to the organization. As businesses create (and need) more data than ever before, the sheer number of BI failures threatens to grow exponentially. This could have a far-reaching impact on the underlying digital transformation initiatives that these BI projects are designed to enable.

Given that companies are releasing new applications faster than ever—some releasing updates on demand and multiple times per day—too many organizations are using manual ETL test processes and the wrong tools to manage critical parts of releases for highly visible, often customer-facing applications. That translates into risk: risk to customer loyalty, the brand, confidential data, and critical business decisions.

This blog explores how applying DevOps-style test automation to DWH/BI and other data integration projects can guarantee a high level of data quality—instilling the trust that is essential for the success of BI projects and the digital transformation initiatives that are ultimately driving them.

DevOps, with its focus on tool automation across the entire development lifecycle, addresses an enormous challenge for “big data” and DWH/BI developers. Many of today’s big data and DWH/BI projects are already leveraging (or actively planning to adopt) Agile and DevOps processes—but not so much for testing. DWH/BI projects, in general, are not currently using automated testing tools to the extent that is needed for project successes. Perhaps this is because they believe the required testing functions are not commercially available, or are too complex and expensive to develop in-house.

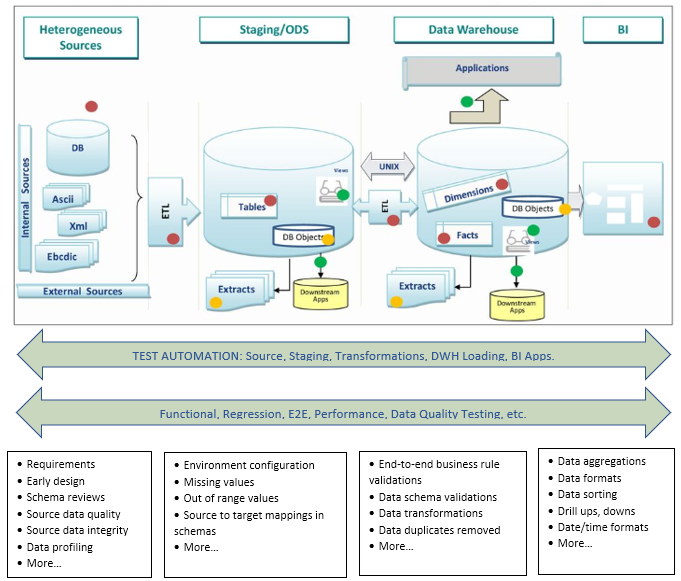

When thinking about what needs to be tested to ensure data integrity, it’s important to consider that BI is more than just data warehouses (DWH) and ETL (Extract Transform Load). Services between the ETL processes, as well as the middleware and dashboard visualizations, also come under the purview of BI. Messages and negotiating pacts between these layers is complex and requires much coordination and testing.

DevOps helps facilitate this with constant deployments and testing. Implementing a DevOps testing approach to DWH/BI means automating the testing of different source and target data sets to keep data current. This can be tremendously beneficial when handling many diverse data sources and volumes—for some projects, hundreds. Your team will be able to detect errors before they threaten BI applications in production. Moreover, you will have more time to fix issues before reaching production.

Continuous quality is a systematic approach to achieving the quality goals of development and the businesses it supports. In the 2018 Magic Quadrant for Software Test Automation, Gartner states: “Test automation tools are essential elements of a DevOps toolchain and enablers for achieving the continuous quality approach required for successful DevOps.”

Test automation is equally essential for guaranteeing a high level of data quality. The more we test, the more bugs will be resolved before going live. This is especially crucial for business intelligence projects. When the users can’t trust the data, it’s likely that the BI solution itself will not be trusted…and fail.

As mentioned earlier, ETL testing is primarily conducted manually, which makes it a very labor intensive and error prone process. Automating ETL tests allows frequent smoke and regression testing without much user intervention and supports automated testing on older code after each new database build. Automation can not only help execute tests; it can also assist with designing and managing them.

The decision to implement automated tools for ETL testing depends on a budget that supports additional spending to meet advanced testing requirements. It is important to remember that test tools built and maintained in house are better than no test automation at all. In the end, test automation will save much time. Additionally, business users will appreciate the quality of BI deliverables and accept the data from the Data Platform solution as the “single version of the truth.

Figure 1: A sampling of tests and validations that should be considered for most DWH/BI projects

To learn about the most highly-recommended test automation planning steps for DWH/BI projects and which ETL and DWH/BI verifications are best for automation, read the complete white paper: BI/DWH Testing: Why Automation is Imperative

Explore how Tricentis AI Workspace enables autonomous quality at...

From agentic AI to API simulation, Tosca’s cloud deployment is...

Learn how AI-driven quality intelligence transforms QA to test what...

Transform your manual testing workflow to deliver higher quality...

Join us for a preview of some of the most notable features on the...

Achieve continuous performance excellence — detect issues early,...